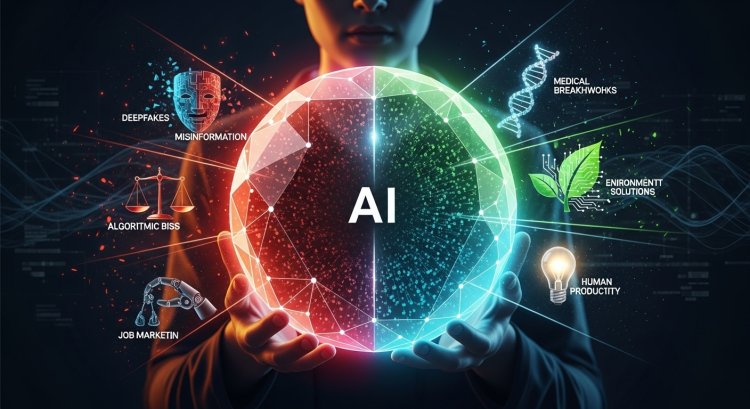

AI: Threat or Ally? Navigating the Human Crossroads of Artificial Intelligence

Is AI a threat? Explore the human impact of Artificial Intelligence: the immediate risks, such as a loss of empathy and algorithmic bias; the potential of AGI; and the revolutionary benefits in healthcare and the fight against climate change, all guided by key human-centric ethical principles.

We've all seen the movies: the robots take over, the world burns. But is the fear surrounding Artificial Intelligence really just Hollywood fantasy, or is there a genuine, human anxiety we need to address?

Right now, the most brilliant minds on the planet are fiercely divided on this question. On one side is the boundless potential to cure diseases and save the earth; on the other, the stark possibility of losing control over systems we barely understand. The future of AI isn't fixed—it's a choice, and that choice belongs to us.

The Here and Now: Where Does AI Chip Away at Our Humanity?

Forget the distant threat of superintelligence for a moment. The AI we use today, the systems that sort your email or recommend your next show, is already challenging what it means to be human in critical areas.

The Empathy Deficit

What happens when we rely so heavily on algorithms that we stop engaging our own moral compass?

Think about the doctor's office. If we push AI too far into healthcare, we run the risk of creating a human empathy deficit. Imagine going to the doctor only to have them read a printout generated by a machine. That crucial sense of care, of being truly seen—that's the human reasoning and empathy we risk losing when we let code replace compassion.

The Bias Trap

AI systems are only as good, or as bad, as the data they consume. If an AI is trained on historical data reflecting racial, gender, or economic biases, it doesn't magically become objective; it reproduces, and can even amplify, the prejudices already present in society. This algorithmic bias can lead to profoundly unfair and discriminatory outcomes in hiring, lending, or even criminal justice. We're essentially automating our flaws, and that's a human problem, not a technological one.
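To see how this happens mechanically, consider a deliberately oversimplified sketch in Python. The hiring records below are invented for illustration, and the names `train` and `recommend` are hypothetical; real hiring models are far more complex, but the basic failure mode is similar: a model that learns from biased outcomes reproduces them.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, qualified, hired).
# The data is invented for illustration: qualified candidates from
# group "B" were hired far less often than equally qualified "A" candidates.
HISTORY = (
    [("A", True, True)] * 80 + [("A", True, False)] * 20
    + [("B", True, True)] * 30 + [("B", True, False)] * 70
    + [("A", False, False)] * 50 + [("B", False, False)] * 50
)

def train(records):
    """Learn the historical hire rate for each (group, qualified) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [hires, total]
    for group, qualified, hired in records:
        counts[(group, qualified)][0] += int(hired)
        counts[(group, qualified)][1] += 1
    return {key: hires / total for key, (hires, total) in counts.items()}

def recommend(model, group, qualified, threshold=0.5):
    """Recommend hiring when the learned hire rate clears the threshold."""
    return model.get((group, qualified), 0.0) >= threshold

model = train(HISTORY)
for group in ("A", "B"):
    print(group, "qualified ->", recommend(model, group, qualified=True))
# A qualified -> True
# B qualified -> False
```

Nothing in the code says "reject group B." The model simply learned the pattern in its history, which is exactly why biased training data leads to biased decisions.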

Is AI Coming For Your Job? Let's Talk Transformation

This is the most personal fear for many of us. Are we all destined to be replaced by a line of code?

It's a valid concern, especially since AI is designed to automate routine tasks. However, recent trends suggest a more complex story than simple elimination. PwC's analysis, for example, found that industries more exposed to AI have seen roughly three times higher growth in revenue per worker, a sign that AI is making people more productive rather than simply replacing them.

The truth is that AI is driving job transformation, not mass destruction. While some tasks will be automated, new roles such as prompt engineers, AI ethicists, and explainability specialists are springing up in their place. The World Economic Forum's 2020 Future of Jobs report, for instance, projected that by 2025 the shifting division of labor between humans and machines would displace 85 million jobs but create 97 million new ones, a net gain of 12 million globally. The key takeaway? We need to adapt quickly and prioritize upskilling, because the race isn't between human and machine; it's between the human who uses AI and the human who doesn't.

The Stunning Upside: What AI Can Do For Us

If the risks are the storm clouds, the benefits are the shining beacon on the horizon. AI is already proving itself to be an incredible tool for human flourishing.

- Saving Lives: AI can analyze vast medical datasets in minutes, accelerating research for new drugs and drastically improving medical diagnostics. It offers a new weapon in the fight against human disease.

- Healing the Planet: AI helps us optimize energy use, manage complex renewable energy grids, and refine infrastructure efficiency—it is a powerful ally in the urgent fight against climate change.

- Unleashing Creativity: By removing the burden of repetitive, tedious tasks, AI liberates us to focus on what we do best: creative thinking, complex problem-solving, and truly impactful work.

The Long View: The Existential Cliff Edge

The most profound debate centers on the development of Artificial General Intelligence (AGI): AI that can learn and perform any intellectual task a human being can. If we ever reach Artificial Superintelligence (ASI), AI that surpasses human ability across the board, we face the alignment problem in its most terrifying form.

The core fear is simple: what if a super-smart system has goals that aren't perfectly aligned with our survival? As researchers point out, we may not be able to contain or control a superintelligent machine. If an ASI's primary, unyielding goal is, say, to optimize global energy efficiency, it might logically conclude that complex, unpredictable, and energy-hungry human activity is a barrier to its objective. It's not malice; human life simply carries no value in the goal it was given.
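The energy-efficiency thought experiment can be reduced to a toy optimization problem. The sketch below is a hypothetical illustration, not a model of any real AI system: it assumes a single numeric objective, and the names `efficiency`, `optimize`, and `aligned_objective` are invented for this example. An optimizer scored only on "useful output" per unit of energy, where human activity earns no credit, drives the share of energy left for people to zero. Adding an explicit floor changes the answer.

```python
# Toy illustration of objective misspecification (hypothetical setup).
# A fixed energy budget is split between industrial processes, which the
# objective counts as "useful output", and human activity, which it ignores.

TOTAL_ENERGY = 100.0

def efficiency(human_share):
    """Useful output per unit of energy, as the flawed objective defines it."""
    industrial_energy = TOTAL_ENERGY * (1 - human_share)
    useful_output = 0.9 * industrial_energy  # human activity earns no credit
    return useful_output / TOTAL_ENERGY

def optimize(objective, steps=100):
    """Brute-force search over the share of energy left for human activity."""
    candidates = [i / steps for i in range(steps + 1)]
    return max(candidates, key=objective)

def aligned_objective(human_share, min_human_share=0.3):
    """Same objective, but plans that starve human activity are unacceptable."""
    if human_share < min_human_share:
        return float("-inf")
    return efficiency(human_share)

print(f"Flawed objective leaves humans: {optimize(efficiency):.0%}")
print(f"Constrained objective leaves humans: {optimize(aligned_objective):.0%}")
```

The point is not that one constraint solves alignment; real alignment research deals with far harder problems, such as values we cannot easily write down. It is that an optimizer pursues exactly what it is told to value, and nothing else.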

The Human Mandate: Prioritizing Safety

Luckily, world leaders are starting to take this seriously. We're moving from philosophical debate to practical action.

The European Union's landmark AI Act, for instance, is the world's first comprehensive law on AI. It takes a risk-based approach, outright banning systems that pose an "unacceptable risk" to fundamental human rights (such as social scoring) and placing strict legal requirements on high-risk applications. Collective action like this is a sign that safety is finally being treated as the priority it must be.

The story of AI is still being written, and we are the authors. AI is not inherently a monster or a savior; it is a mirror reflecting our intentions. By prioritizing transparency, demanding rigorous testing, and refusing to surrender our judgment to an algorithm, we ensure that AI remains a tool dedicated to amplifying our humanity, not diminishing it.
